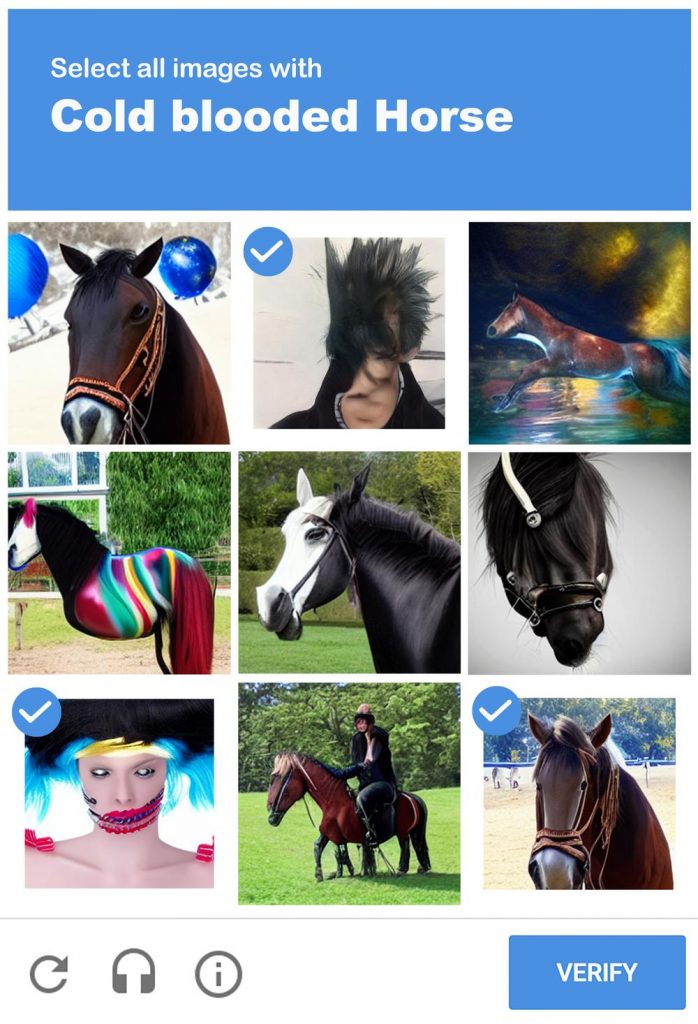

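The CAPTCHA test determines whether a user is human by generating images that non-human intelligences, i.e. ‘bots’ or computer programs, find difficult to interpret. We assume that we differ from artificial intelligence in our capacity to love, empathise and hold morals, but in fact the system judges us human only because we are better at recognising traffic lights, school buses and fire hydrants in pictures. As the number of machine-generated images on the web gradually increases, will humans eventually be unable to understand the logic and intelligence of robots' images?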

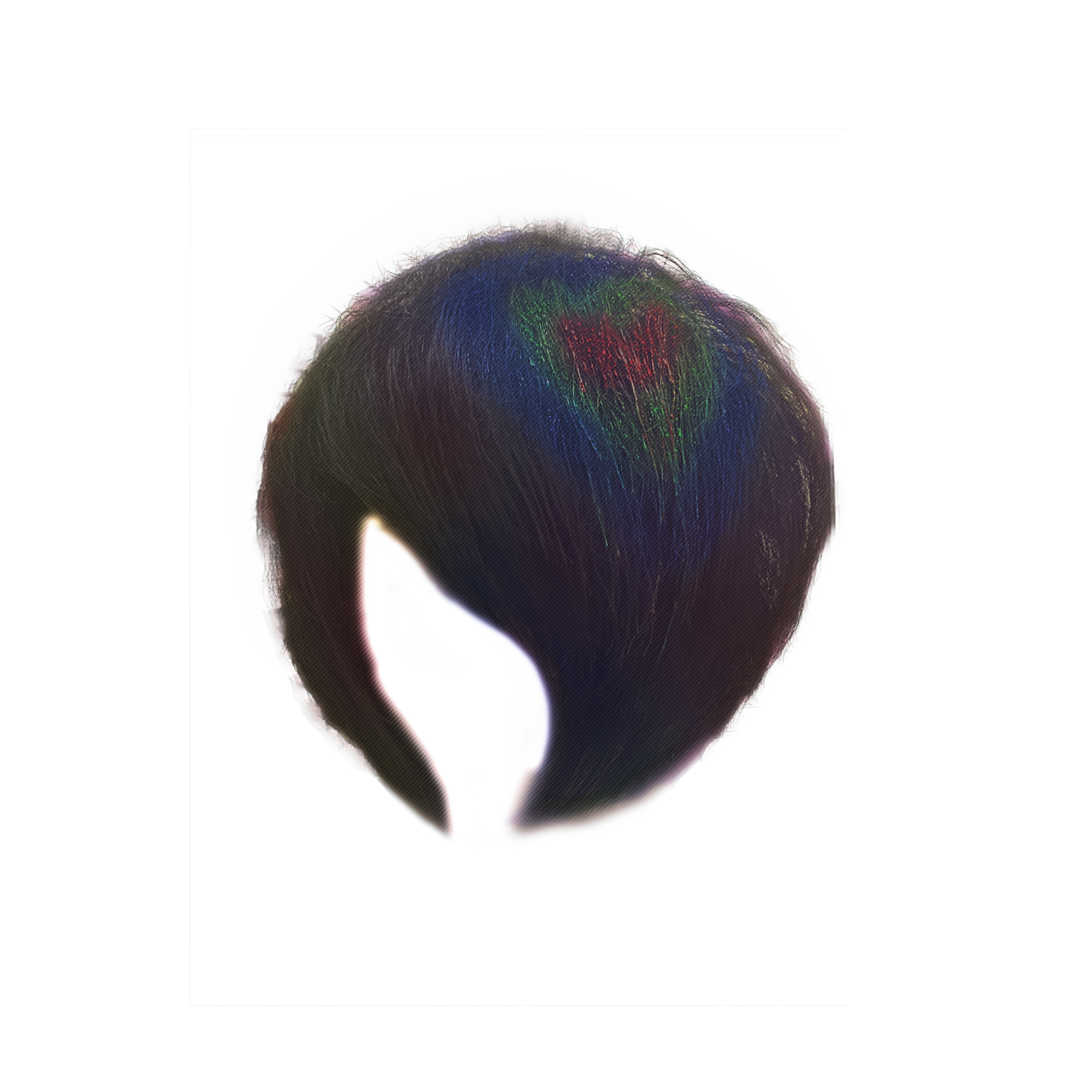

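The Smart (杀马特; ‘smart’ also means an intelligent person) were filtered out of their refuges on the web by members of the ‘anti-Smart alliance’. The anti-Smart gained entry to groups by falsely assuming the identity of the Smart. Once inside, through automated spamming, account blocking and general persistent abuse, they corrupted these spaces and drove the Smart out of the online communities. For their safety, the Smart cut their hair.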

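The @beYourOwnMastr bot aims to contaminate the filtered space. The Smart were filtered out, both in real life and online. Humans failed to recognise them correctly. The @beYourOwnMastr bot uses text and image generation models to access datasets and reconnect to traces of the Smart. Through Twitter, the bot will republish Smart voices in the public domain. The text component of the project was created in collaboration with Kate Roger.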

Funa Ye + Kate Roger

The CAPTCHA test determines whether a user is human by generating images that are difficult for non-human intelligence, i.e. ‘bots’ or computer programs, to interpret. We assume we are different from robots in our ability to love, empathise and hold morals, but does the system know us only as the ones who are better at recognising traffic lights, school buses and fire hydrants in pictures? As the number of machine-generated images on the web gradually increases, does it follow that humans may become unable to understand the logic of a robot's images?

The Smart were filtered out of their places of refuge on the web by the anti-Smart. The anti-Smart gained access to groups by falsely identifying as Smart themselves. Once in, through auto-spamming, account blocking and general persistent abuse, the anti-Smart corrupted these spaces, driving the Smart from the online communities.

The abuse continued offline through physical attacks and anti-Smart propaganda. For their safety, the Smart cut their hair and took to ‘flat’ hairstyles, or to wigs they could remove if needed.

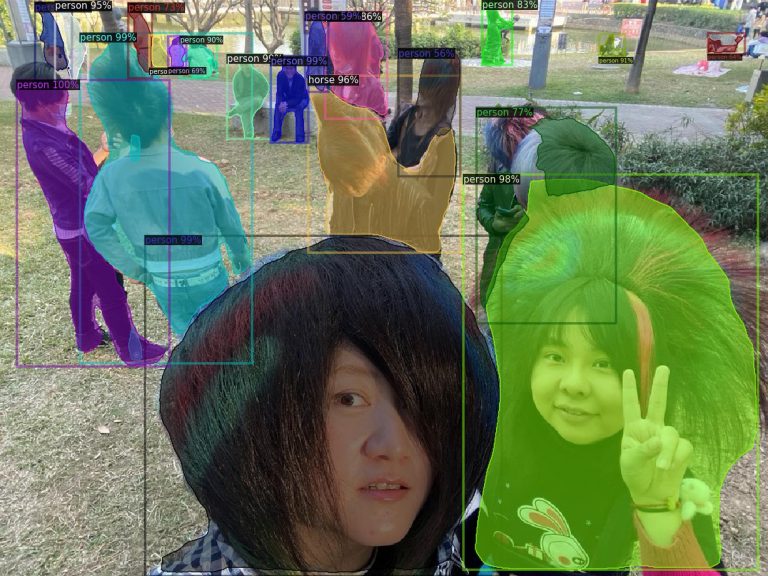

The @beYourOwnMastr bot aims to contaminate the filtered space. The Smart were filtered out, in real life and online. Humans failed to recognise them correctly. The @beYourOwnMastr bot uses generative models to access datasets and reconnect to traces of the Smart. Through Twitter, the bot will republicise Smart voices in the public domain.

Accompanying text about the Twitter feed

The @beYourOwnMastr bot's content is the output of the GPT-2 language model trained by Ye and Rogers on a dataset of Smart interview transcriptions. This output is interspersed with scripts translated with the hwcha Martian-to-Chinese translator, DeepL Translate and Google Translate, and edited by Funa Ye and Kate Rogers.

Text and image generation via generative models, using Sudowrite, Wenxin Yige, DALL-E and RunwayML.

Sudowrite bases its responses on OpenAI's GPT-3 NLP model, with additional training on ‘large parts’ of the internet gathered by scraping. DeepL uses artificial neural networks trained by supervised learning on “many millions of translated texts” found by specially trained crawlers; the company does not disclose which models it uses. Google Translate describes its process as statistical machine translation, trained on millions of documents that human translators have already translated, sourced from organisations such as the UN and from websites all around the world. Language models trained through RunwayML use OpenAI's GPT-2 NLP model as their base.